Google Releases Enhanced Gemini 2.5 Pro Preview with Major Performance Improvements

Note: This model supports a thinking mode (append the -thinking suffix to the model code) and an internet search mode (append the #search suffix).

For example, if the model code is abc, the thinking variant is abc-thinking and the internet-search variant is abc#search.
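The suffix convention in the note can be expressed as a small helper. This is a minimal sketch that assumes the suffixes behave exactly as the note states and are simply appended to the base code. `model_variants` is a hypothetical name, and `abc` is the note's own placeholder, not a real model code.

```python
from urllib.parse import quote

# Suffixes exactly as stated in the note above; this sketch assumes they are
# plain string suffixes appended to whatever base code the platform assigns.
THINKING_SUFFIX = "-thinking"
SEARCH_SUFFIX = "#search"


def model_variants(base_code: str) -> dict[str, str]:
    """Return the default, thinking-mode, and internet-mode model codes (hypothetical helper)."""
    return {
        "default": base_code,
        "thinking": base_code + THINKING_SUFFIX,
        "search": base_code + SEARCH_SUFFIX,
    }


variants = model_variants("abc")
print(variants)  # {'default': 'abc', 'thinking': 'abc-thinking', 'search': 'abc#search'}

# '#' starts a URL fragment, so percent-encode the code if it is ever placed in a URL.
print(quote(variants["search"]))  # abc%23search
```

If the model code travels in a JSON request body, the `#` needs no special handling; the encoding step only matters when the code becomes part of a URL.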

Google has begun rolling out an upgraded preview of its Gemini 2.5 Pro model, designated 06-05, which improves on earlier 2.5 Pro releases in coding, reasoning, and response style. The update is available now as a preview and is expected to reach general availability within the coming weeks.

Key Performance Enhancements

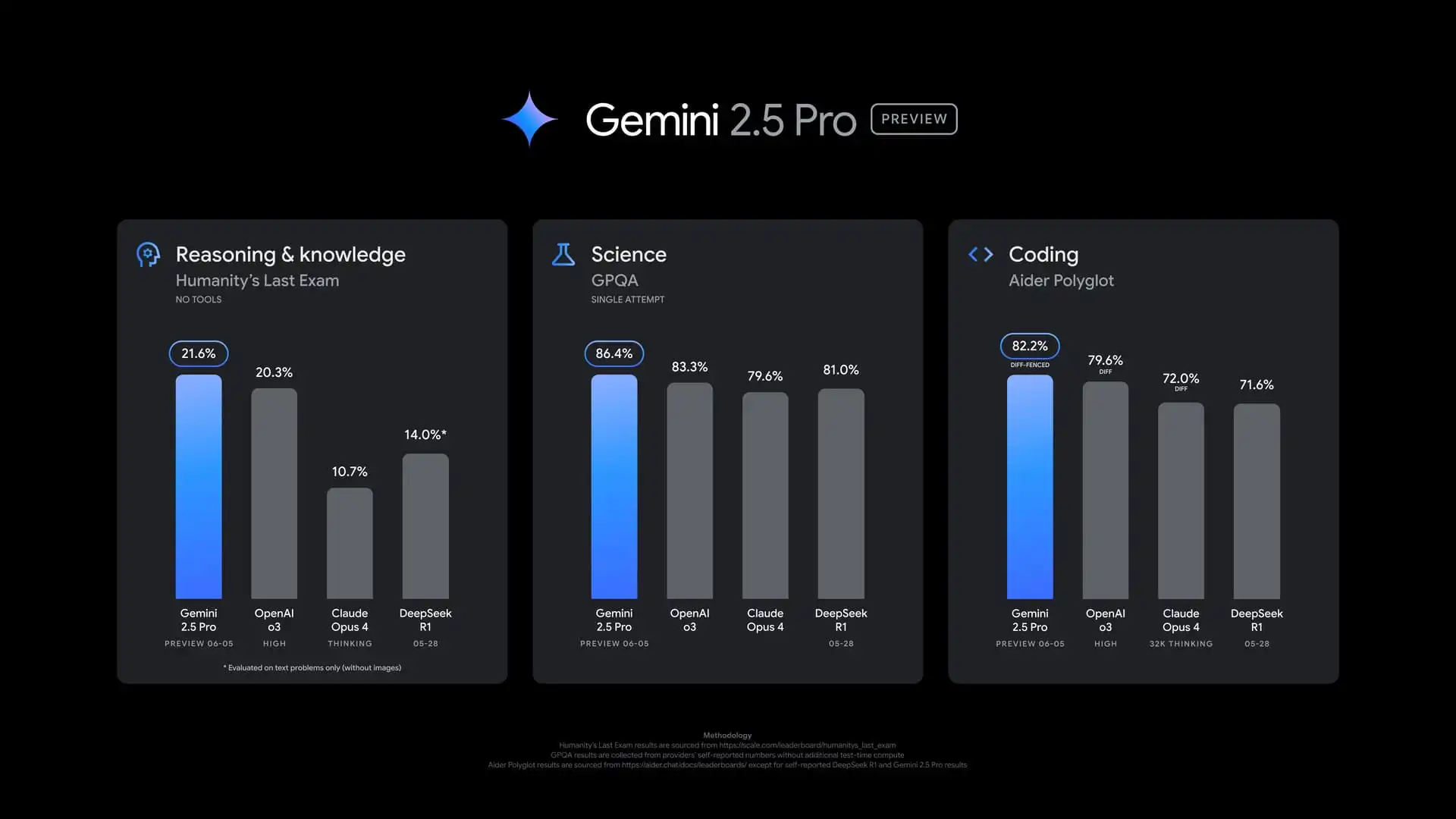

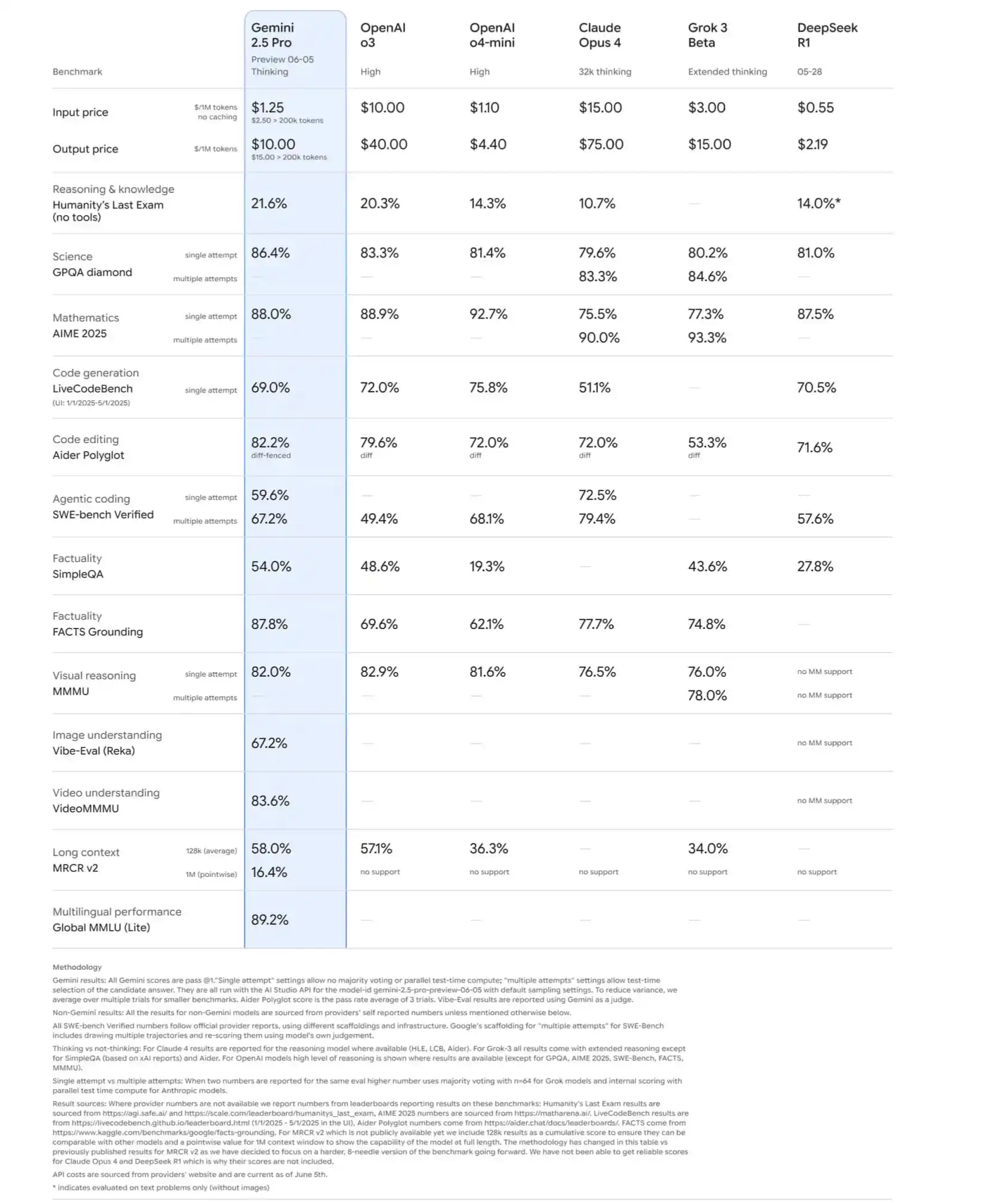

Gemini 2.5 Pro 06-05 builds on the I/O Edition (05-06) released last month, which focused on coding improvements. The new version keeps that coding strength, posting strong results on coding benchmarks such as Aider Polyglot.

Beyond coding, the model improves on academic and reasoning tasks. Google reports that the 06-05 version delivers “top-tier performance on GPQA and Humanity’s Last Exam (HLE),” two demanding benchmarks that test a model’s mathematics, science, knowledge, and reasoning capabilities.

Competitive Performance Metrics

The upgraded model also posted gains on head-to-head leaderboards. On LMArena, Gemini 2.5 Pro 06-05 gained 24 Elo points, reaching 1470. On WebDevArena it gained 35 Elo points and now leads with a score of 1443.
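Elo gains are easier to interpret as head-to-head preference rates. The sketch below assumes these leaderboards report scores on the standard Elo logistic scale (base 10, scale factor 400) and derives each previous version's implied score by subtracting the reported gain (24 on LMArena, 35 on WebDevArena) from the new score. Because arena scores are recomputed as votes accumulate, treat the derived numbers as rough illustrations, not published results.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# New scores and gains as reported in the article. The "old" score is an
# assumption: new score minus gain, on an unchanged leaderboard scale.
leaderboards = {
    "LMArena": (1470, 24),
    "WebDevArena": (1443, 35),
}

for name, (new_score, gain) in leaderboards.items():
    old_score = new_score - gain
    p = expected_score(new_score, old_score)
    print(f"{name}: {old_score} -> {new_score}, expected preference {p:.3f}")
# LMArena: 1446 -> 1470, expected preference 0.534
# WebDevArena: 1408 -> 1443, expected preference 0.550
```

Under these assumptions, the 24-point LMArena gain corresponds to the new version being preferred in roughly 53% of direct comparisons with its predecessor, and the 35-point WebDevArena gain to roughly 55%.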

Addressing User Feedback

The release also responds to user feedback on earlier updates. Some users had reported that, outside of coding, recent versions performed worse than the earlier 03-25 model. Google says it addressed this in 06-05 by improving “style and structure,” so the model can be more creative and produce better-formatted responses.

Availability and Implementation

The upgraded Gemini 2.5 Pro is rolling out in preview through several channels. Developers can access it through the Gemini API in Google AI Studio and through Vertex AI. The release also brings thinking budgets to 2.5 Pro, a feature first introduced with 2.5 Flash, which let developers set how many tokens the model may spend on reasoning and so control cost and latency.
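As a rough illustration of how a thinking budget is set, the sketch below builds a raw REST request for the Gemini API's `generateContent` endpoint using only the Python standard library. It assumes the preview model ID `gemini-2.5-pro-preview-06-05` and the `generationConfig.thinkingConfig.thinkingBudget` request field as described in Google's public Gemini API documentation; allowed budget ranges are model-specific, so check the current docs. `build_request` is a hypothetical helper, and the request is only constructed, never sent.

```python
import json
import urllib.request

# Assumption: the preview model ID for this release. Preview IDs are retired
# over time, so confirm it against the current model list before use.
MODEL_ID = "gemini-2.5-pro-preview-06-05"
ENDPOINT = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_request(prompt: str, thinking_budget: int, api_key: str,
                  model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) a generateContent request with a thinking budget."""
    body = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Target number of tokens the model may spend on internal reasoning.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }
    return urllib.request.Request(
        ENDPOINT.format(model=model),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


req = build_request("Summarize the Elo rating system in two sentences.",
                    thinking_budget=1024, api_key="YOUR_API_KEY")
print(req.full_url)
print(req.data.decode("utf-8"))
# To send it with a real key: urllib.request.urlopen(req)
```

A smaller budget generally means lower latency and cost per request, while a larger one leaves room for more extended reasoning on hard prompts.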

Consumers can also use the upgraded model in the Gemini app, which receives the 2.5 Pro preview at the same time as the developer release.

Google has said that a stable, generally available version of the upgraded model will follow in approximately two weeks, moving it from preview to full production availability.